The UK’s biggest crisis text line for people needing urgent mental health support gave third-party researchers access to millions of messages from children and other vulnerable users despite a promise never to do so.

Shout, a helpline launched with £3m of funding from the Royal Foundation of the Duke and Duchess of Cambridge, says it offers a confidential service for people struggling to cope with issues such as suicidal thoughts, self-harm, abuse and bullying.

An FAQ section on its website had said that while “anonymised and aggregated” “high-level data” from messages was passed to trusted academic partners for research to improve the service, “individual conversations cannot and will never be shared”.

But that promise was deleted from the site last year, and access to conversations with hundreds of thousands of people – including children under 13 – has since been given to third-party researchers, the Observer has found.

Mental Health Innovations, the charity that runs the helpline, said all users agreed to terms of service that allowed data to be shared with researchers for studies that would “ultimately benefit those that use our service and the wider population”.

But the findings have led to a backlash among privacy experts, data ethicists and people who use the helpline, who said the data sharing raised ethical concerns.

More than 10.8m messages from 271,445 conversations between February 2018 and April 2020 were used in a project with Imperial College London aimed at creating tools that use artificial intelligence to predict behaviour, including, for instance, suicidal thoughts.

Personal identifiers including names and phone numbers were removed before the messages were analysed, according to Shout, which says the messages were “anonymised”.

But data used in the research included “whole conversations” about people’s personal problems, according to the study.

Part of the research was aimed at gleaning personal information about the texters from their messages, such as their age, gender and disability, to get a better understanding of who was using the service.

Cori Crider, a lawyer and co-founder of Foxglove, an advocacy group for digital rights, said giving researchers access to the messages raised “serious ethical questions”. “Trust is everything in health – and in mental health most of all. When you start by saying in your FAQs that ‘individual conversations cannot and will never be shared’; and suddenly move to training AI on hundreds of thousands of ‘whole conversations’, you’ve left behind the feelings and expectations of the vulnerable people you serve,” she said. “It’s particularly sad because this could set back proper consented research by years. Loss of trust in these systems can deter desperate people from coming forward.”

Others raised concerns that people using the service could not truly comprehend how their messages would be used when at crisis point – even if they were told it was going to be used in research – because of their vulnerable state. About 40% of people texting the helpline are suicidal, according to Shout, and many are under 18.

Claire Maillet, 30, who used the service for support with anxiety and an eating disorder in 2019 and 2020, said she had no idea messages could be used for research. “My understanding was that the conversation would stay between me and the person I was messaging and wouldn’t go any further,” she said. “If I had known that it would, then I can’t say for certain that I still would have used the service.”

Maillet said she “completely understood” the need to conduct research in order to “make services better” in cases where people could give their informed consent.

But she said using conversations from a crisis helpline raised ethical concerns, even if names were removed, because of the nature of what is being discussed.

“When you’re at that crisis point, you’re not thinking, ‘Will this information be used for research?’ You can spin it in a way that makes it sound good, but it feels like they’re exploiting vulnerability in a way.”

Phil Booth, coordinator of medConfidential, which campaigns for the privacy of health data, said it was “unreasonable to expect a youngster or any person in crisis to be able to understand” that their conversations could be used for purposes other than providing them with support. “There are clearly reasons why Shout may need to retain data: no one is denying that. It’s these other uses: they’re just not what people would expect.”

He added that it was “misleading” and “plainly wrong” that the charity had told users that their individual conversations would not be shared. “If you make a promise like that you should stick to it,” he said.

Shout was set up in 2019 as a legacy of Heads Together, a campaign led by the Duke and Duchess of Cambridge and Prince Harry aimed at ending stigma around mental health. The service aims to provide round-the-clock mental health support to people in the UK, many of whom struggle to access overstretched NHS services: they can text a number and receive a response from a trained volunteer.

Mental Health Innovations states that a key goal is to use “anonymised, aggregated data to generate unique insights into the mental health of the UK population”.

“We use these insights to enhance our services, to inform the development of new resources and products, and to report on trends of interest for the wider mental health sector,” it says.

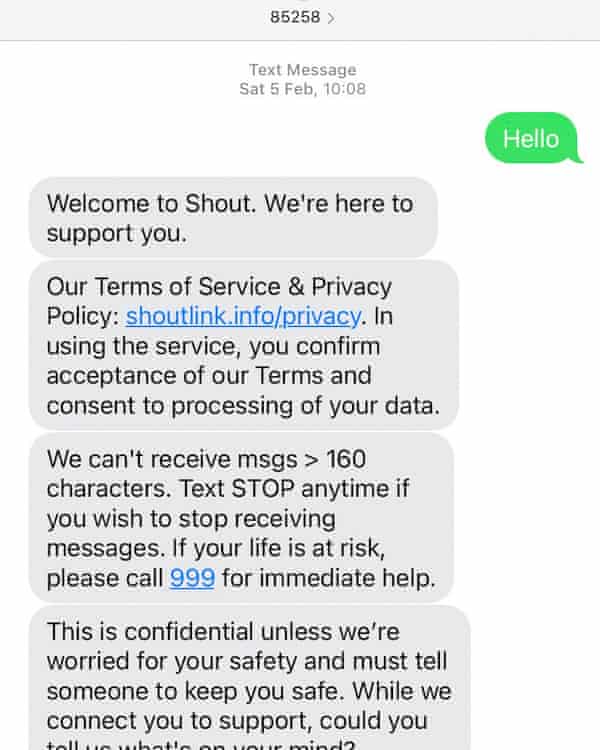

When service users first contact Shout, they are told: “This is confidential unless we’re worried about your safety and must tell someone to keep you safe.” They are also sent a text with a link to the website and privacy policy, along with the message: “In using the service you confirm acceptance of our terms and consent to processing of your data.”

However, earlier versions of the FAQs made a promise that has since been deleted, meaning people who texted the service in the past – including those whose messages were used in the research – may have read information that was later changed.

An archived version of the helpline’s website from March 2020 shows that, in the FAQ section, users were told: “We anonymise and aggregate high-level data from conversations in order to study and improve our own performance. We share some of this data with carefully selected and screened academic partners, but the data is always anonymised, individual conversations cannot and will never be shared.”

In late spring 2021, the FAQ section was updated and the promise about individual conversations removed. The privacy policy was also updated to say that, as well as being shared, data could be used to “enable a deeper understanding of the mental health landscape in the UK more generally”, opening the door to a broader range of uses.

The Imperial study, published in December 2021, involved analysis of “whole conversations”, both manually and using artificial intelligence, according to the paper. To improve the service, it had three goals: predicting the conversation stages of messages, predicting behaviours from texters and volunteers, and “classifying whole conversations to extrapolate demographic information” – for instance by scanning messages for things such as numbers or mentions of school life, which might indicate a user’s age.

“Looking ahead, we aim to train models to classify labels that could be considered directly actionable or provide a direct measure of conversation efficacy. For example, a key future objective is prediction of a texter’s risk of suicide,” the researchers wrote.

Data was handled in a trusted research environment and the study received ethics approval from Imperial, which said the research “fully complied” with “stringent ethical review policies” and provided “important insights”.

But Dr Sandra Leaton Gray, associate professor of education at University College London and an expert on the effects of technology on children and young people, said that as a researcher she saw “a lot of red flags” with the secondary data use, including that parental consent was not sought.

Dr Christopher Burr, an ethics fellow and expert in AI at the Alan Turing Institute, said responsible data analysis could bring huge benefits. But he said not being transparent enough with users about how sensitive conversations would be used created a “culture of mistrust”.

“They need to be doing more to ensure their users are meaningfully informed about what’s going on,” he said.

The concerns about Shout follow an investigation in the US by the news website Politico that uncovered a data-sharing partnership between an American mental health charity and a for-profit company.

Shout is the UK counterpart of Crisis Text Line in the US, which faced intense scrutiny earlier this month over its dealings with Loris.AI, a company that promises to help businesses “handle their hard customer conversations with empathy”.

After details of the partnership with Loris came to light, Crisis Text Line cut ties with the organisation, and UK users were assured that their data had not been shared with Loris.

As well as working with academic researchers, Mental Health Innovations has formed partnerships with private bodies including Hive Learning, a tech company.

Hive, whose clients include Boots and Deloitte, worked with Shout to create an AI-powered mental health programme that the firm sells to companies to help them “sustain mass behaviour change at scale”. It is owned by Blenheim Chalcot, “the UK’s leading digital venture builder”, which was founded by Shout trustee Charles Mindenhall.

Mental Health Innovations said “no data relating to any texter conversations” had been shared with Hive and that its training programme was “developed with learnings from our volunteer training model”.

In a statement, the charity said that all data shared with academic researchers, including Imperial, had details including names, addresses, city names, postcodes, email addresses, URLs, phone numbers and social media handles removed, and claimed it was “highly unlikely” that it could be tied to individuals.

It said seeking parental consent before using the data in research “would make the service inaccessible for many children and young people”, adding that it understood that texters “desperate for immediate help … may not be able to read the terms and conditions that we send before they start their conversation with us”, but that the privacy policy remained available for them to read at a later stage. If they later decided to withdraw consent, they could text “Loofah” to the helpline number and would have their data deleted, a spokeswoman said.

Victoria Hornby, Mental Health Innovations’ chief executive, added that the dataset built from Shout conversations was “unique” because it contained information about “people in their own words”, and could unlock huge benefits in mental health research.

She said the promise about individual conversations never being shared was in the FAQs, rather than the actual privacy policy, and had been removed from the website because it could be “easily misunderstood”, as there were “different interpretations” of what “individual conversations” meant. “We didn’t want it to be misunderstood so we changed it,” she said.

The Information Commissioner’s Office, the UK’s data protection watchdog, said it was assessing evidence relating to Shout and Mental Health Innovations’ handling of user data.

A spokeswoman said: “When handling and sharing people’s health data, especially children’s data, organisations need to take extra care and put safeguards in place to ensure their data is not used or shared in ways they would not expect. We will assess the information and make enquiries on this matter.”

In the UK and Ireland, Samaritans can be contacted on 116 123, or by email at jo@samaritans.org or jo@samaritans.ie.